Neo4j Data Loader

Neo4j Data Loader performance improvement

Insights, updates, and technical articles from our team.

Model Context Protocol (MCP) lets AI agents call out to your own tools and services. This post walks through a minimal MCP server that returns the current time in EST and shows how it plugs into modern AI workflows.

This article shows how financial institutions can use Neo4j Graph Data Science (GDS) for centrality, community detection, graph embeddings, and graph‑native ML pipelines, with concrete Cypher and Python examples tailored to credit risk and fraud analytics.

Discover how Everflow turns a Neo4j‑based credit risk graph into a production‑ready machine learning and GenAI platform, combining graph features, ML pipelines, and explainable AI for regulated financial institutions.

Implementing internationalization (i18n) does not have to be heavy or complex; a simple, well-structured setup can take you a long way and is usually enough for an MVP or early-stage product.

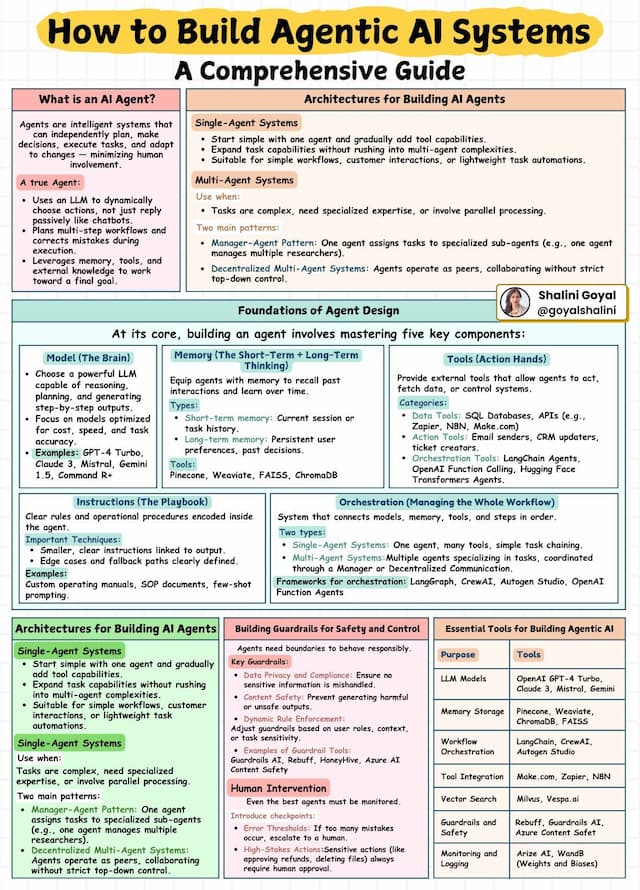

Learn how to design and orchestrate multiple AI agents into a seamless, end-to-end automated workflow. This article explores architecture, communication patterns, and real-world implementation strategies for multi-agent systems.

Most teams try to fight LLM hallucinations by “using a bigger model” or “adding RAG on top,” but the results are often inconsistent and fragile. The core issue is not only model quality, but the lack of a reliable, stateful workflow around the model: there is no explicit place to validate, correct, or safely route uncertain answers. LangGraph addresses this by modeling LLM applications as stateful graphs, where retrieval, generation, grading, self-correction, and human review become first-class nodes in a controlled process. This article explains why LangGraph is well suited for reducing hallucinations in production systems and outlines concrete design patterns you can adopt immediately.

Change Data Capture (CDC) on Snowflake enables low-latency, incremental data pipelines by tracking row-level changes instead of repeatedly scanning entire tables. By combining Snowflake Streams, Tasks, and (optionally) Dynamic Tables, teams can build CDC pipelines that are scalable, cost-efficient, and easy to operate. This article explains the core concepts behind Snowflake CDC, how it works under the hood, and what patterns and pitfalls data engineers should be aware of when designing real-time pipelines on Snowflake.